本站文章除注明转载外,均为本站原创: 转载自love wife & love life —Roger 的Oracle技术博客

本文链接地址: 数套ASM RAC的恢复案例

前不久帮助某客户恢复了6套Oracle RAC,均为ASM,而且版本均为10.2.0.4。熬夜好几天,差点吐血了。

这里以其中一套库的恢复进行简单说明,跟大家分享。

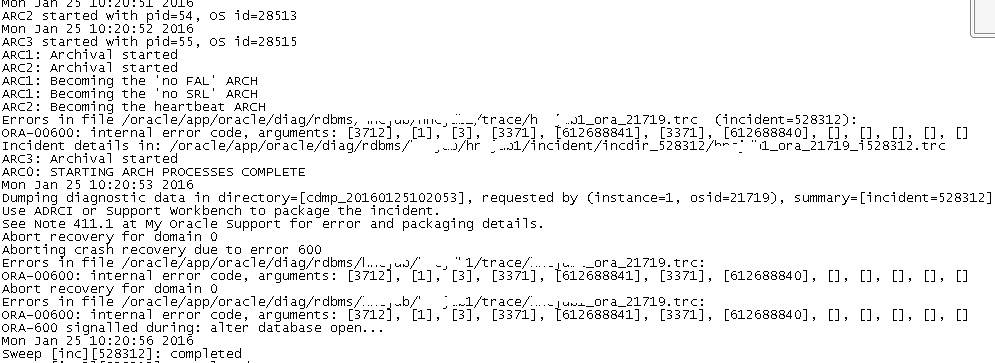

其中几套基本上都遇到了如下的ORA-00600 错误:

Thu Dec 31 11:55:46 2015 SUCCESS: diskgroup DG1 was mounted Thu Dec 31 11:55:50 2015 Errors in file /oracle/admin/xxx/udump/xxx1_ora_28803.trc: ORA-00600: internal error code, arguments: [kccpb_sanity_check_2], [13715626], [13715623], [0x000000000], [], [], [], [] SUCCESS: diskgroup DG1 was dismounted Thu Dec 31 11:55:51 2015

对于该错误,其实很简单,主要是因为控制文件损坏,通过重建控制文件或者利用备份的控制文件进行restore即可进行mount;甚至于我们利用控制文件快照都可以进行数据库mount;然后接着进行恢复操作。在恢复的过程中还遇到了如下的错误:

Errors in file /oracle/admin/xxx/udump/xxx1_ora_6990.trc: ORA-00600: internal error code, arguments: [kclchkblk_4], [3431], [18446744072948603858], [3431], [18446744072948586897], [], [], [] Tue Jan 5 10:52:28 2016 Errors in file /oracle/admin/xxx/bdump/xxx1_arc0_8205.trc: ORA-19504: failed to create file "+DG1/xxx/archivelog/1_2_900069464.dbf" ORA-17502: ksfdcre:4 Failed to create file +DG1/xxx/archivelog/1_2_900069464.dbf ORA-00600: internal error code, arguments: [kffbAddBlk04], [], [], [], [], [], [], [] Tue Jan 5 10:52:28 2016 ARC0: Error 19504 Creating archive log file to '+DG1/xxx/archivelog/1_2_900069464.dbf' ARCH: Archival stopped, error occurred. Will continue retrying Tue Jan 5 10:52:30 2016 ORACLE Instance xxx1 - Arc

上述的ORA-00600错误其实很简单,主要是数据块SCN的问题。这里以其中一套库的恢复进行大致说明,因为在恢复该库的过程中,遇到了一件十分神奇的事情。

SQL> startup mount pfile='/tmp/p.ora'; ORACLE instance started. Total System Global Area 2.1475E+10 bytes Fixed Size 2122368 bytes Variable Size 2399145344 bytes Database Buffers 1.9059E+10 bytes Redo Buffers 14651392 bytes Database mounted. SQL> ALTER DATABASE ADD LOGFILE THREAD 2 2 GROUP 3 ( 3 '+DG/xxxx/onlinelog/group_3.271.752099989', 4 '+DG/xxxx/onlinelog/group_3.272.752099991' 5 ) SIZE 100M REUSE, 6 GROUP 4 ( 7 '+DG/xxxx/onlinelog/group_4.273.752099991', 8 '+DG/xxxx/onlinelog/group_4.274.752099993' 9 ) SIZE 100M REUSE, 10 GROUP 6 ( 11 '+DG/xxxx/onlinelog/group_6.275.752099993', 12 '+DG/xxxx/onlinelog/group_6.276.752099993' 13 ) SIZE 100M REUSE; ALTER DATABASE ADD LOGFILE THREAD 2 * ERROR at line 1: ORA-01276: Cannot add file +DG/xxxx/onlinelog/group_3.271.752099989. File has an Oracle Managed Files file name.

由于是ORACLE RAC,因此重建控制文件之后,是需要添加redo logfile的;然而add logfile 发现报上述错误。根据Oracle metalink的一些方法均不能成功,都报上面的错误,确实很怪异。

有些人看上述的错误,可能会认为是设置了OMF的参数,其实这里并不是,我将相关参数全部修改之后,错误依旧。

这里实际上添加logfile时,只写磁盘组名称就行了,不需要写绝对路径。

接着在进行recover后进行open resetlogs打开时,报错ORA-01248,如下:

SQL> startup mount pfile='/tmp/p.ora';

ORACLE instance started.

Total System Global Area 2.1475E+10 bytes

Fixed Size 2122368 bytes

Variable Size 2399145344 bytes

Database Buffers 1.9059E+10 bytes

Redo Buffers 14651392 bytes

Database mounted.

SQL> recover database using backup controlfile until cancel;

ORA-00279: change 13300428179625 generated at 04/04/2013 12:51:35 needed for

thread 1

ORA-00289: suggestion : +DG/archivelog/arch1_752099890_12809_1.log

ORA-00280: change 13300428179625 for thread 1 is in sequence #12809

Specify log: {<RET>=suggested | filename | AUTO | CANCEL}

auto

ORA-00308: cannot open archived log

'+DG/archivelog/arch1_752099890_12809_1.log'

ORA-17503: ksfdopn:2 Failed to open file

+DG/archivelog/arch1_752099890_12809_1.log

ORA-15173: entry 'arch1_752099890_12809_1.log' does not exist in directory

'archivelog'

ORA-00308: cannot open archived log

'+DG/archivelog/arch1_752099890_12809_1.log'

ORA-17503: ksfdopn:2 Failed to open file

+DG/archivelog/arch1_752099890_12809_1.log

ORA-15173: entry 'arch1_752099890_12809_1.log' does not exist in directory

'archivelog'

ORA-01547: warning: RECOVER succeeded but OPEN RESETLOGS would get error below

ORA-01194: file 1 needs more recovery to be consistent

ORA-01110: data file 1: '+DG/xxxx/datafile/system.256.752099833'

SQL> alter database open resetlogs;

alter database open resetlogs

*

ERROR at line 1:

ORA-01248: file 42 was created in the future of incomplete recovery

ORA-01110: data file 42: '+DG/xxxx/datafile/file_tab_xdidx03.ora'

这个错误还是比较少见,实际上网上那些说法,以及Oracle mos提供的解决方法我发现都不行。

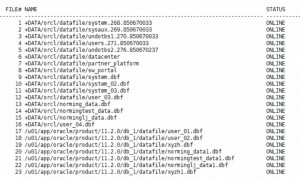

无奈只能先将其offline ,然后再进行恢复。再进行open之前我查询了当前的checkpoint scn如下:

SQL> select file#,checkpoint_change# from v$datafile;

FILE# CHECKPOINT_CHANGE#

---------- -----------------------

1 14731601024328

2 14731601024328

3 14731601024328

4 13300428179625

5 14731601024328

6 14731601024328

7 14731601024328

.......

39 14731601024328

40 14731601024328

41 14731601024328

42 14731601024328

43 14731601024328

43 rows selected.

SQL> c/datafile/datafile_header

1* select file#,checkpoint_change# from v$datafile_header

SQL> /

FILE# CHECKPOINT_CHANGE#

---------- -----------------------

1 14731601024328

2 14731601024328

3 14731601024328

4 13300428179625

5 14731601024328

6 14731601024328

7 14731601024328

......

40 14731601024328

41 14731601024328

42 14731601024328

43 14731601024328

43 rows selected.

由于open失败,这里我想着是不是这2个文件有问题,又用之前的快照控制文件进行recover一把,然后再次用重建的控制文件起来数据库进行recover,发现神奇的事情出现了:

SQL> recover database using backup controlfile until cancel;

ORA-00279: change 13305808683011 generated at 01/11/2016 21:09:02 needed for

thread 1

ORA-00289: suggestion : +DG/archivelog/arch1_900882531_1_1.log

ORA-00280: change 13305808683011 for thread 1 is in sequence #1

Specify log: {<RET>=suggested | filename | AUTO | CANCEL}

auto

ORA-00308: cannot open archived log '+DG/archivelog/arch1_900882531_1_1.log'

ORA-17503: ksfdopn:2 Failed to open file +DG/archivelog/arch1_900882531_1_1.log

ORA-15173: entry 'arch1_900882531_1_1.log' does not exist in directory

'archivelog'

ORA-00308: cannot open archived log '+DG/archivelog/arch1_900882531_1_1.log'

ORA-17503: ksfdopn:2 Failed to open file +DG/archivelog/arch1_900882531_1_1.log

ORA-15173: entry 'arch1_900882531_1_1.log' does not exist in directory

'archivelog'

ORA-01547: warning: RECOVER succeeded but OPEN RESETLOGS would get error below

ORA-01194: file 1 needs more recovery to be consistent

ORA-01110: data file 1: '+DG/xxxx/datafile/system.256.752099833'

SQL> alter database datafile 42 offline;

alter database datafile 43 offline;

Database altered.

SQL>

Database altered.

SQL>

SQL> alter database open resetlogs;

alter database open resetlogs

*

ERROR at line 1:

ORA-01092: ORACLE instance terminated. Disconnection forced

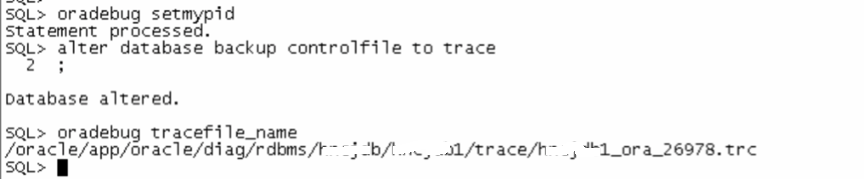

我们可以看到open 失败了,对于open失败的 情况,我们首先是看alert log,接着10046 trace。

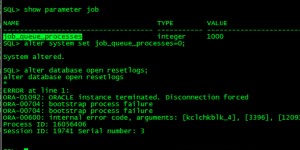

SQL> startup nomount pfile='/tmp/p.ora'; ORACLE instance started. Total System Global Area 2.1475E+10 bytes Fixed Size 2122368 bytes Variable Size 2399145344 bytes Database Buffers 1.9059E+10 bytes Redo Buffers 14651392 bytes SQL> oradebug setmypid Statement processed. SQL> alter database mount; Database altered. SQL> recover database; Media recovery complete. SQL> alter database open; alter database open * ERROR at line 1: ORA-01092: ORACLE instance terminated. Disconnection forced ORA-00704: bootstrap process failure ORA-00704: bootstrap process failure ORA-00604: error occurred at recursive SQL level 1 ORA-01555: snapshot too old: rollback segment number 4 with name "_SYSSMU4$" too small

这里我又屏蔽了undo相关的参数。再次尝试发现错误依旧。再次启动,神奇的事情出现了,SCN居然倒退了?

SQL> startup mount pfile='/tmp/p.ora';

ORACLE instance started.

Total System Global Area 2.1475E+10 bytes

Fixed Size 2122368 bytes

Variable Size 2399145344 bytes

Database Buffers 1.9059E+10 bytes

Redo Buffers 14651392 bytes

Database mounted.

SQL> recover database;

Media recovery complete.

SQL> select checkpoint_change#,file# from v$datafile;

CHECKPOINT_CHANGE# FILE#

--------------------------- ----------

13314398637607 1

13314398637607 2

13314398637607 3

13314398637607 4

13314398637607 5

......

13314398637607 38

13314398637607 39

13314398637607 40

13314398637607 41

0 42

0 43

43 rows selected.

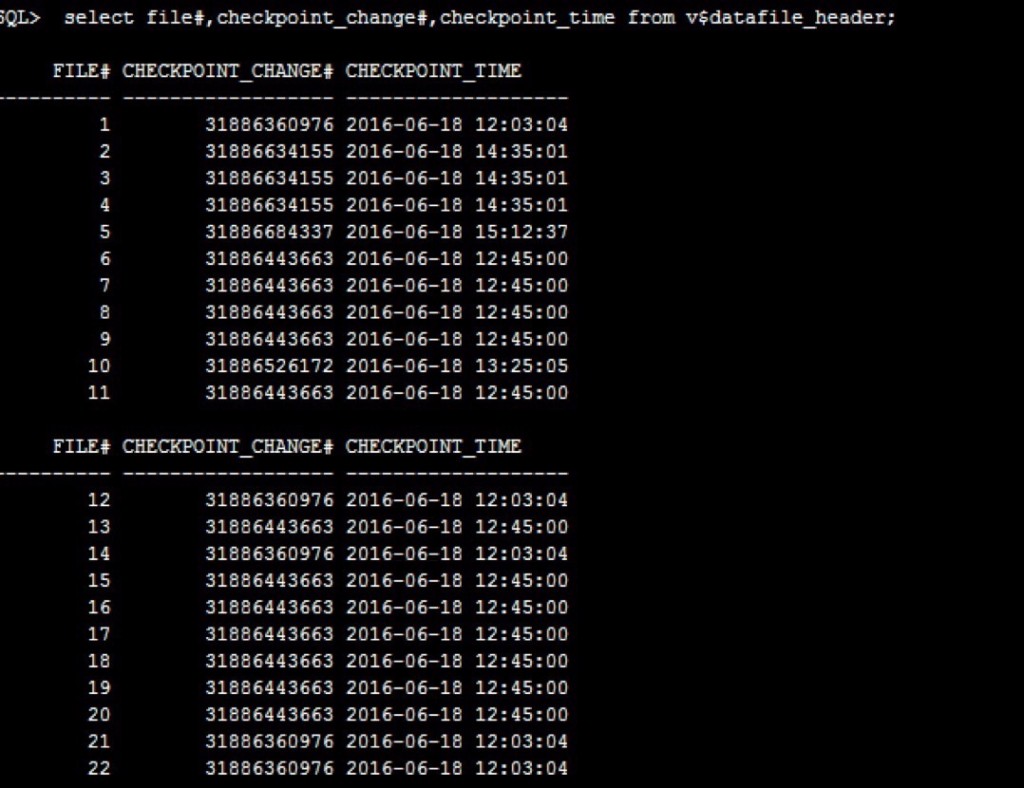

SQL> select checkpoint_change#,file#,checkpoint_time from v$datafile_header;

CHECKPOINT_CHANGE# FILE# CHECKPOIN

--------------------------- ---------- ---------

13314398637607 1 11-JAN-16

13314398637607 2 11-JAN-16

13314398637607 3 11-JAN-16

13314398637607 4 11-JAN-16

13314398637607 5 11-JAN-16

......

13314398637607 39 11-JAN-16

13314398637607 40 11-JAN-16

13314398637607 41 11-JAN-16

14731601024328 42 30-DEC-15

14731601024328 43 30-DEC-15

43 rows selected.

很明显,这个133的scn 回退到了过去2年前了,出现时空穿越了。。。。 当然,open肯定还是报错:

SQL> alter database open; alter database open * ERROR at line 1: ORA-01092: ORACLE instance terminated. Disconnection forced ORA-00704: bootstrap process failure ORA-00704: bootstrap process failure ORA-00604: error occurred at recursive SQL level 1 ORA-01555: snapshot too old: rollback segment number 4 with name "_SYSSMU4$" too small

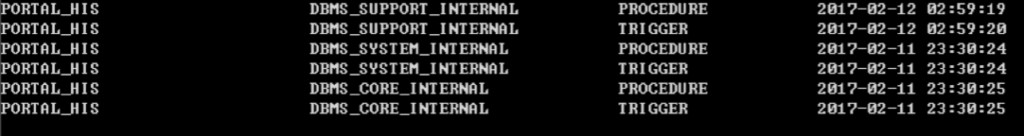

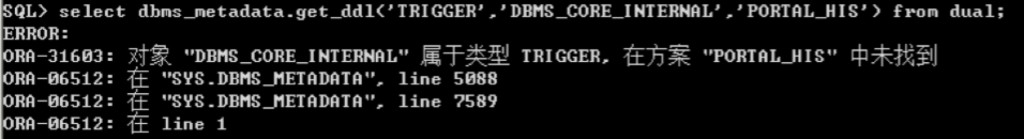

这里先不管为啥连数据文件头的SCN都倒退了(之前被offline的2个文件scn是OK的). 通过10046 trace得到如下内容:

PARSING IN CURSOR #5 len=52 dep=1 uid=0 oct=3 lid=0 tim=1418474357830663 hv=429618617 ad='5db7ea50' select ctime, mtime, stime from obj$ where obj# = :1 END OF STMT PARSE #5:c=0,e=531,p=0,cr=0,cu=0,mis=1,r=0,dep=1,og=4,tim=1418474357830659 BINDS #5: kkscoacd Bind#0 oacdty=02 mxl=22(22) mxlc=00 mal=00 scl=00 pre=00 oacflg=08 fl2=0001 frm=00 csi=00 siz=24 off=0 kxsbbbfp=2ad7172aa020 bln=22 avl=02 flg=05 value=20 EXEC #5:c=1000,e=673,p=0,cr=0,cu=0,mis=1,r=0,dep=1,og=4,tim=1418474357831431 WAIT #5: nam='db file sequential read' ela= 9843 file#=1 block#=218 blocks=1 obj#=-1 tim=1418474357841421 ..... FETCH #6:c=17997,e=64936,p=23,cr=566,cu=0,mis=0,r=1,dep=2,og=3,tim=1418474357907669 STAT #6 id=1 cnt=1 pid=0 pos=1 obj=15 op='TABLE ACCESS BY INDEX ROWID UNDO$ (cr=566 pr=23 pw=0 time=64913 us)' STAT #6 id=2 cnt=1 pid=1 pos=1 obj=34 op='INDEX UNIQUE SCAN I_UNDO1 (cr=1 pr=1 pw=0 time=5769 us)' WAIT #5: nam='db file sequential read' ela= 13031 file#=40 block#=167538 blocks=1 obj#=-1 tim=1418474357920819 FETCH #5:c=19996,e=89548,p=25,cr=568,cu=0,mis=0,r=0,dep=1,og=4,tim=1418474357921006

我们这里可以看到,这里报错的SQL读取了file 1 block 218,以及file 40 block 167538。

对于file 1 block 218,我dump 发现没有活动事务;而file 40 block 167538则为undo 块.

SQL> select name from v$datafile where file#=40; NAME -------------------------------------------------------------------------------- +DG/xxxx/datafile/undotbs4

同时dump 了这个undo 块,发现确实感觉有些异常,如下所示:

******************************************************************************** UNDO BLK: xid: 0x0009.01b.0014320a seq: 0xa47 cnt: 0x1 irb: 0x1 icl: 0x0 flg: 0x0000 Rec Offset Rec Offset Rec Offset Rec Offset Rec Offset --------------------------------------------------------------------------- 0x01 0x0014 *----------------------------- * Rec #0x1 slt: 0x1b objn: 55417(0x0000d879) objd: 296039 tblspc: 20(0x00000014) * Layer: 10 (Index) opc: 21 rci 0x00 Undo type: Regular undo Last buffer split: No Temp Object: No Tablespace Undo: No rdba: 0x0a028e71 *----------------------------- index general undo (branch) operations KTB Redo op: 0x05 ver: 0x01 op: R itc: 2 Itl Xid Uba Flag Lck Scn/Fsc 0x01 0x0009.01b.0014320a 0x0a028e71.0a47.04 ---- 1 fsc 0x0000.00000000 0x02 0x0009.02b.001428b6 0x0a028e6f.0a47.06 ---- 112 fsc 0x0000.00000000 Dump kdige : block dba :0x05d630b3, seghdr dba: 0x06076e89 restore block before image

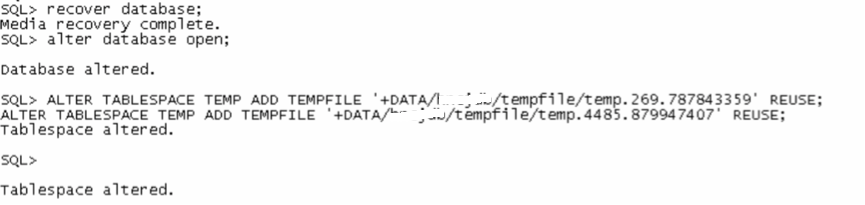

由于所有的文件头SCN 都倒退了,正常open 都报错,只能推进SCN,而且SCN必须要比这个undo block的最大SCN 还要大一些才行,通过在pfile文件中加入参数*._minimum_giga_scn即可。

SQL> conn /as sysdba Connected to an idle instance. SQL> startup mount pfile='/tmp/p.ora'; ORACLE instance started. Total System Global Area 2.1475E+10 bytes Fixed Size 2122368 bytes Variable Size 2399145344 bytes Database Buffers 1.9059E+10 bytes Redo Buffers 14651392 bytes Database mounted. SQL> show parameter job NAME TYPE VALUE ------------------------------------ ----------- ------------------------------ job_queue_processes integer 10 SQL> alter system set job_queue_processes=0; System altered. SQL> alter database open; Database altered. SQL> drop tablespace undotbs3 including contents and datafiles; Tablespace dropped. SQL> drop tablespace undotbs4 including contents and datafiles; Tablespace dropped.

顺利打开数据库之后,立即将原有的undo 表空间进行drop 并重建。

虽然数据库是打开了,然而其中有2个数据文件之前被我们offline了,而且中间进行了resetlogs操作,因此现在无法进行正常online了。

SQL> select file#,checkpoint_change# from v$datafile;

FILE# CHECKPOINT_CHANGE#

---------- ------------------------------

1 14759124431097

2 14759124431097

3 14759124431097

4 14759124431097

5 14759124431097

......

36 14759124431097

37 14759124431097

38 14759124431097

41 14759124431097

42 0

43 0

44 14759124431097

45 14759124431097

43 rows selected.

SQL> alter database datafile 42 online;

alter database datafile 42 online

*

ERROR at line 1:

ORA-01190: control file or data file 42 is from before the last RESETLOGS

ORA-01110: data file 42: '+DG/xxxx/datafile/file_tab_xxx03.ora'

SQL> alter database datafile 43 online;

alter database datafile 43 online

*

ERROR at line 1:

ORA-01190: control file or data file 43 is from before the last RESETLOGS

ORA-01110: data file 43: '+DG/xxxx/datafile/file_tab1_xxx05.ora'

这里用bbed 将上面2个文件头相关信息修改掉,然后进行recover,可以顺利online文件。

SQL> recover datafile 42;

Media recovery complete.

SQL> alter database datafile 42 online;

Database altered.

SQL> recover datafile 43;

Media recovery complete.

SQL> alter database datafile 43 online;

Database altered.

SQL> select file#,checkpoint_change# ,status from v$datafile;

FILE# CHECKPOINT_CHANGE# STATUS

---------- ------------------------------ -------

1 14759124821491 SYSTEM

2 14759124821491 SYSTEM

3 14759124821491 ONLINE

4 14759124821491 ONLINE

5 14759124821491 ONLINE

。。。。。。

36 14759124821491 ONLINE

37 14759124821491 ONLINE

38 14759124821491 ONLINE

41 14759124821491 ONLINE

42 14759124831966 ONLINE

43 14759124832115 ONLINE

44 14759124821491 ONLINE

45 14759124821491 ONLINE

43 rows selected.

SQL> alter system checkpoint;

System altered.

SQL> select file#,checkpoint_change# ,status from v$datafile;

FILE# CHECKPOINT_CHANGE# STATUS

---------- ------------------------------ -------

1 14759124832224 SYSTEM

2 14759124832224 SYSTEM

3 14759124832224 ONLINE

4 14759124832224 ONLINE

5 14759124832224 ONLINE

......

38 14759124832224 ONLINE

41 14759124832224 ONLINE

42 14759124832224 ONLINE

43 14759124832224 ONLINE

44 14759124832224 ONLINE

45 14759124832224 ONLINE

43 rows selected.

最后建议将数据库expdp 导出并重建。到此告一段落!

Related posts: